BARIATRIC CARE VR

Role: VR Developer / Research Assistant

Company: Victoria University of Wellington

Platform: Meta Quest 3

Engine: Unreal Engine 5

Duration: 10 months (full-time)

Bariatric Care VR is a virtual reality educational platform that aims to address the significant health challenges faced by people living with extreme obesity (PlwO) in Aotearoa, particularly Māori and Pacific Peoples. It consists of three learning modules: a guided tutorial, an interactive clinical risk-assessment exercise, and an immersive animated experience.

In this role, I collaborated closely with designers and healthcare professionals to ensure the educational content was both accurate and engaging. I delivered all aspects of the development process, including designing immersive environments, programming interactive features, and compositing visual elements. My work helped create a seamless and impactful learning experience that bridges the gap between clinical knowledge and practical training. This enables healthcare professionals to better understand and empathise with bariatric patients.

August 2024 - June 2025

TUTORIAL MODULE

The Tutorial module introduces users to the platform and its controls, with a particular focus on those used in the Risk Assessment module. Testing showed that the majority of our stakeholders had never used virtual reality before, so we created the Tutorial to familiarise them with VR and the controls.

This module walks players through mechanics such as movement, picking up and dropping objects, opening and closing menus, and adding or removing hospital equipment from the room. It provides a no-pressure environment where users can learn the controls without also having to focus on educational content.

Responsive Visual Prompts

The Tutorial module uses visual prompts to guide players step-by-step through each mechanic. Prompts appear in the environment to indicate where players should look, move, or interact, and respond dynamically to player input, progressing only once the correct action is completed. This helps ensure players fully engage with and understand each system before moving on.
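The gating behaviour described above can be sketched outside the engine as a small step sequencer. This is an illustrative Python sketch only, not the project's Blueprint graph; the class names, prompt text, and action names are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TutorialStep:
    prompt: str           # text shown on the in-world prompt (hypothetical)
    required_action: str  # hypothetical action name that completes the step


@dataclass
class Tutorial:
    steps: List[TutorialStep]
    index: int = 0

    @property
    def finished(self) -> bool:
        return self.index >= len(self.steps)

    def current_prompt(self) -> Optional[str]:
        """Prompt to display now, or None once every step is done."""
        return None if self.finished else self.steps[self.index].prompt

    def on_player_action(self, action: str) -> bool:
        """Advance only when the action matches the current step."""
        if self.finished or action != self.steps[self.index].required_action:
            return False  # wrong input: the prompt stays where it is
        self.index += 1
        return True
```

Any input other than the one the current step asks for is ignored, so the prompt stays in place until the player performs the right action.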

RISK ASSESSMENT MODULE

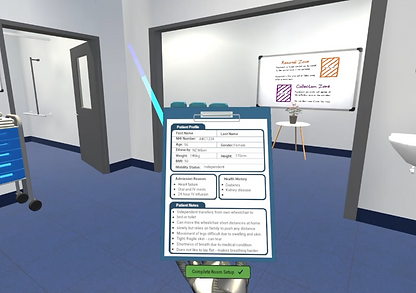

In the Risk Assessment module, players take on the role of healthcare staff and must set up a hospital room for an arriving patient. After receiving a phone call from the emergency department, users refer to their patient's information (accessible via a 'clipboard' style menu) to populate the hospital room with the appropriate equipment. This encourages them to consider aspects such as mobility restrictions, weight limitations, and leaving enough safe working space to help their patient. Once players are satisfied with their setup, they receive feedback on their choices and have the chance to revisit the hospital room.

Distance Grab/Move

I implemented distance grab and move functionality, allowing players to grab objects from afar, move them along the X and Y axes using the joystick, and rotate them for precise placement. The Z-axis remains fixed so objects stay naturally grounded. This system helped me practice input mapping, object manipulation, and building intuitive, responsive interactions in VR.
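As a rough illustration of the movement rule above, the sketch below applies joystick input to a held object on the X/Y plane and leaves Z untouched. It is a minimal, engine-agnostic Python sketch, not the Blueprint implementation; the speeds, the deadzone, and the mapping of stick axes to world axes are assumptions.

```python
from dataclasses import dataclass


@dataclass
class HeldObject:
    x: float
    y: float
    z: float          # height: never modified, so the object stays grounded
    yaw: float = 0.0  # rotation about the vertical axis, in degrees


def apply_stick(obj: HeldObject, stick_x: float, stick_y: float,
                rotate: float, dt: float,
                move_speed: float = 100.0,   # units per second (assumed)
                turn_speed: float = 90.0,    # degrees per second (assumed)
                deadzone: float = 0.15) -> None:
    """Move on X/Y and rotate about Z from analogue input in [-1, 1]."""
    def filtered(value: float) -> float:
        # Ignore small stick drift so held objects do not creep.
        return 0.0 if abs(value) < deadzone else value

    obj.x += filtered(stick_x) * move_speed * dt
    obj.y += filtered(stick_y) * move_speed * dt
    obj.yaw = (obj.yaw + filtered(rotate) * turn_speed * dt) % 360.0
    # obj.z is deliberately left unchanged.
```

Scaling by the frame time `dt` keeps the movement speed independent of frame rate, which matters for comfort in VR.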

Visual Placement Feedback

I created a visual placement indicator that changes the object's colour based on whether it can be placed in the current location. Objects turn green when the space is clear and red when they overlap another object. This feedback system helped me practice collision detection and made equipment placement more intuitive and user-friendly.
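The green/red rule can be illustrated with a simple overlap test on floor footprints. This is a simplified Python sketch that assumes axis-aligned rectangular footprints; in the engine the check would rely on collision volumes instead, and all names here are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Footprint:
    """Axis-aligned rectangle an object occupies on the floor."""
    min_x: float
    min_y: float
    max_x: float
    max_y: float


def overlaps(a: Footprint, b: Footprint) -> bool:
    # Strict inequalities: footprints that only touch edges do not overlap.
    return (a.min_x < b.max_x and b.min_x < a.max_x and
            a.min_y < b.max_y and b.min_y < a.max_y)


def placement_colour(candidate: Footprint, placed: Iterable[Footprint]) -> str:
    """Return 'red' if the candidate overlaps anything, otherwise 'green'."""
    return "red" if any(overlaps(candidate, other) for other in placed) else "green"
```

Because the comparison is strict, two items can sit flush against each other and still show green.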

Hand-Mounted Menus

Equipment Removal

Equipment can be removed from the environment by moving it to the designated “Removal Zone” in the corridor. Once placed in this area, the item despawns after a short delay. This simulates a real-world hospital system where equipment would be collected by yourself (the player) or another staff member, such as an orderly.

Equipment Ordering & Collection

Players can order equipment using the hand-mounted equipment menu, which is organised into categories such as beds, chairs, bathroom, mobility, and furniture. Each item includes an image, name, safe working load, and relevant dimensions to support informed decision-making. To order an item, players simply select it from the menu. After a short delay to reflect real-world wait times, which can range from 10 minutes to over an hour, the equipment arrives. A “delivery!” audio cue signals its arrival, and players can collect it from the designated Collection Zone in the corridor outside the patient’s room.
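One way to model the delayed delivery is a queue keyed on arrival time. The Python sketch below is illustrative only and assumes a single fixed delay for every item; the class name, item names, and delay value are hypothetical, and the in-engine version would more likely use a timer per order.

```python
import heapq
from typing import List, Tuple


class DeliveryQueue:
    """Holds ordered items until their simulated wait has elapsed."""

    def __init__(self, delay_seconds: float) -> None:
        self.delay = delay_seconds
        self._pending: List[Tuple[float, int, str]] = []  # (arrival, order no., item)
        self._count = 0  # tie-breaker so equal arrival times keep order

    def order(self, item: str, now: float) -> None:
        heapq.heappush(self._pending, (now + self.delay, self._count, item))
        self._count += 1

    def collect_arrivals(self, now: float) -> List[str]:
        """Pop every item whose delay has passed; the caller plays the audio cue."""
        arrived = []
        while self._pending and self._pending[0][0] <= now:
            arrived.append(heapq.heappop(self._pending)[2])
        return arrived


queue = DeliveryQueue(delay_seconds=5.0)
queue.order("bariatric bed", now=0.0)
print(queue.collect_arrivals(now=6.0))
```

Polling `collect_arrivals` once per frame with the current game time is enough to trigger the arrival cue and spawn each item in the Collection Zone.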

Curtain Functionality

All curtains in the hospital room are fully interactable to support real-world accuracy. Players can use the trigger to toggle each curtain (left, middle, and right) between open and closed states. This allows them to set up the environment as they would in an actual clinical setting, down to the finer details of patient privacy and room preparation.

Feedback Menu

Once players complete their room setup, they can open the Feedback Menu via the patient clipboard. This hand-mounted menu provides detailed feedback on their equipment choices, indicating whether each item was appropriate, an acceptable alternative, or unsuitable for the patient’s needs. It also explains each decision and checks whether enough space was left for safe care of the patient. After reviewing the feedback, players can choose to revise their setup or, if they met the required criteria, continue to part two of the module.
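The three-way rating could be expressed along the following lines. The actual clinical criteria are not described here, so this Python sketch simply assumes that an item is unsuitable when its safe working load is below the patient's weight, appropriate when it appears on a recommended list, and an acceptable alternative otherwise. All names and numbers are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Set


class Rating(Enum):
    APPROPRIATE = "appropriate"
    ACCEPTABLE = "acceptable alternative"
    UNSUITABLE = "unsuitable"


@dataclass(frozen=True)
class Equipment:
    name: str
    safe_working_load_kg: float


def rate(item: Equipment, patient_weight_kg: float, recommended: Set[str]) -> Rating:
    """Assumed rule set for illustration; not the project's real criteria."""
    if item.safe_working_load_kg < patient_weight_kg:
        return Rating.UNSUITABLE   # cannot safely carry the patient
    if item.name in recommended:
        return Rating.APPROPRIATE  # the preferred choice for this patient
    return Rating.ACCEPTABLE       # safe, but not the first choice
```

Checking the weight limit first means an item on the recommended list is still rejected if it cannot safely support the patient.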

EMPATHY TRAINING MODULE

The Empathy Training module is an immersive experience where users are placed in the shoes of the patient. From a patient's point of view, users experience what it feels like to have a fall, talk to paramedics, and go through the hospital process with a variety of staff interacting with them. This module is designed to help users better understand how scary and uneasy this can be for patients, encouraging them to approach their future patients with empathy and to do everything they can to help their patients feel more comfortable.

Empathy Experience Walkthrough

1. Welcome Loading Screen

2. Contextual Loading Screen

3. Daughter calls an ambulance after you fall

4. Paramedics arrive

5. Transfer to hospital loading screen

6. An unempathetic nurse checks on you

7. An empathetic nurse checks on you

8. Thank you & return to main menu loading screen

Subtitles

I implemented a subtitle system for the Bariatric Care VR project to improve accessibility and support users with hearing impairments or in noisy environments. This involved syncing on-screen text with voiceover dialogue and ensuring the subtitles were clear, readable, and non-intrusive within the VR experience.

I ran into several challenges while implementing this feature and tested multiple approaches before landing on a solution that met the needs of the project. I created a custom spring camera blueprint with an attached text render component, which I used as the camera for each scene before inputting the subtitles. This method allowed the subtitles to appear clearly in front of the player while maintaining the functionality I needed, such as positioning (including an appropriate distance from the player), font customisation, and the ability to time subtitles to sync with each line of dialogue.
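The timing side of this can be sketched as a lookup from playback time to the active subtitle line. This is a minimal Python illustration, not the Blueprint setup described above; it assumes cues do not overlap, and the cue text and timings are made up.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Cue:
    start: float  # seconds into the scene's audio
    end: float    # the cue is hidden from this time onwards
    text: str


class SubtitleTrack:
    def __init__(self, cues: Iterable[Cue]) -> None:
        # Sort once so each lookup is a binary search over start times.
        self.cues = sorted(cues, key=lambda c: c.start)
        self._starts = [c.start for c in self.cues]

    def text_at(self, t: float) -> str:
        """Text to show at playback time t, or '' between lines."""
        i = bisect_right(self._starts, t) - 1  # last cue starting at or before t
        if i >= 0 and t < self.cues[i].end:
            return self.cues[i].text
        return ""
```

Each frame, the returned string would be written to the text component in front of the camera, with an empty string clearing it during pauses in dialogue.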

Real World Reflections

To enhance realism and relatability, we ensured the professional uniforms in Bariatric Care VR closely matched those used in real healthcare settings in Aotearoa New Zealand. Although we outsourced our character models, we were still able to achieve this by altering the colour of the assets. This attention to detail helped make the experience feel more authentic and recognisable to users.

Left: Paramedic character assets used in Bariatric Care VR.

Right: New Zealand paramedic uniforms.

Left: Nurse character assets used in Bariatric Care VR.

Right: New Zealand nurse uniforms.

EXPERIENCE & SKILL DEVELOPMENT

Using Unreal Engine 5, I created immersive and accurate hospital environments, and programmed all functionality within the educational platform. Through extensive testing and debugging I ensured the application had high performance, quality and responsiveness. Collaboration with researchers and healthcare professionals ensured all content was accurate and well presented, creating a realistic learning experience that effectively addresses the challenges faced by individuals with extreme obesity and promotes empathy among healthcare staff.

Working on the Bariatric Care VR platform significantly improved my creative and technical skills within Unreal Engine, particularly in a virtual reality context. Over the course of the prototype's development, I gained hands-on experience designing realistic environments and programming responsive, intuitive interactions using Blueprints. As my first experience developing for virtual reality, it challenged me to think more spatially and empathetically by considering user comfort, presence, and accessibility at every stage. I began by exploring Unreal Engine’s VR template, using it to understand how key components were created, connected, and called within Blueprints. From there, I deepened my understanding through hands-on application, programming the mechanics required for the educational platform and independently researching solutions to bridge any gaps in my knowledge.

Through close collaboration with healthcare professionals, I learned how to translate real-world clinical processes and cultural considerations into meaningful virtual experiences. Crafting hospital and home environments that reflect New Zealand's healthcare context taught me the importance of cultural specificity and representation in design, especially in educational and empathy-focused applications. For me, this reinforced how thoughtful, context-aware design choices can deepen user engagement and create more impactful learning outcomes.

Taking on multiple roles throughout the project - programmer, asset creator and sourcer, sound designer, and compositor - gave me a practical understanding of the VR development pipeline and how each role contributes to creating a cohesive, immersive experience. I believe this experience will make me a more effective team member, as it has given me a broader understanding of my collaborators' roles and the time, skill, and decision-making involved in their work.